A Tour of Machine Learning in Python¶

How to perform exploratory data analysis and build a machine learning pipeline.

In this tutorial I demonstrate key elements and design approaches that go into building a well-performing machine learning pipeline. The topics I'll cover include:

- Exploratory Data Analysis and Feature Engineering.

- Data Pre-Processing including cleaning and feature standardization.

- Dimensionality Reduction with Principal Component Analysis and Recursive Feature Elimination.

- Classifier Optimization via hyperparameter tuning and Validation Curves.

- Building a more powerful classifier through Ensemble Voting and Stacking.

Along the way we'll be using several important Python libraries, including scikit-learn and pandas, as well as seaborne for data visualization.

Our task is a binary classification problem inspired by Kaggle's "Getting Started" competition, Titanic: Machine Learning from Disaster. The goal is to accurately predict whether a passenger survived or perished during the Titanic's sinking, based on data such as passenger age, class, and sex. The training and test datasets are provided here.

I have chosen here to focus on the fundamentals that should be a part of every data scientist's toolkit. The topics covered should provide a solid foundation for launching into more advanced machine learning approaches, such as Deep Learning. For an intro to Deep Learning, see my notebook on building a Convolutional Neural Network with Google's TensorFlow API.

Contents¶

- 1.1 - Getting Started

- 1.1.a) - Importing the Data

- 1.1.b) - Data Completeness

- 1.1.c) - Thinking Up Front / Doing Your Homework!

- 1.1.d) - A Quick Glance at the Sorted CSV File

- 1.2 - Feature Engineering

- 1.2.a) - FamilySize, Surname, Title, and IsChild

- 1.2.b) - Grouping Families and Travellers

- 1.2.c) - Creating Bins for Age

- 1.2.d) - Logarithmic and 'Split' (Effective) Fare

- 1.3 - Univariate Feature Exploration

- 1.3.a) - Data Spread

- 1.3.b) - Univariate Plots

- 1.3.c) - The Role of Cabin

- 1.4 - Exploring Feature Relations

- 1.4.a) - Feature Correlations

- 1.4.b) - Typical Survivor Attributes

- 1.4.c) - Sex, Pclass, and IsChild

- 1.4.d) - FamilySize, Sex, and Pclass

- 1.4.e) - A Closer Look at PClass and Fare

- 1.4.f) - Survival vs Embarked, and its Relation to Pclass and Sex

- 1.4.g) - A Closer Look at GroupType and GroupSize

- 1.4.h) - The Impact of Age on Survival, Given Pclass and GroupSize

- 1.4.i) - Do Groups Tend to Survive or Perish Together?

- 1.5 - Summary of Key Findings

- 2.1 - Checking Data Consistency

- 2.1.a) - Title/Sex Inconsistencies

- 2.1.b) - Embarked/Fare Inconsistencies within Groups

- 2.1.c) - Age/Parch Inconsistencies

- 2.2 - Identifying Outliers

- 2.2.a) - Finding the Outliers

- 2.2.b) - What To Do With Our Outliers?

- 2.3 - Dealing with Missing Feature Entries

- 2.3.a) - Embarked and Fare

- 2.3.b) - Age

- 2.4 - Feature Normalization

- 2.4.a) - Converting Categorical Strings to Numbers

- 2.4.b) - Feature Scaling

3) Feature Selection and Dimensionality Reduction

- 3.1 - Getting Started

- 3.2 - Initial Performance on Full Feature Set

- 3.3 - Feature Importances of our Tree and Ensemble Classifiers

- 3.4 - Dimensionality Reduction via Recursive Feature Elimination (RFE)

- 3.5 - Dimensionality Reduction via Principal Component Analysis (PCA)

- 3.6 - Selecting a Feature Subset Based on our Exploratory Data Analysis

- 3.7 - Summary and Initial Comparison of Feature Subsets

Importing Python Libraries¶

# General Tools:

import math, os, sys # standard python libraries

import numpy as np

import pandas as pd # for dataframes

import itertools # combinatorics toolkit

import time # for obtaining computation execution times

from scipy import interp # interpolation function

# Data Pre-Processing:

from sklearn.preprocessing import StandardScaler # for standardizing data

from collections import Counter # object class for counting element occurences

# Machine Learning Classifiers:

from xgboost import XGBClassifier # xgboost classifier (http://xgboost.readthedocs.io/en/latest/model.html)

from sklearn.linear_model import LogisticRegression, SGDClassifier, Perceptron # linear classifiers

from sklearn.tree import DecisionTreeClassifier, ExtraTreeClassifier # decision tree classifiers

from sklearn.svm import SVC # support-vector machine classifier

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis # LDA classifier

from sklearn.ensemble import AdaBoostClassifier, BaggingClassifier, ExtraTreesClassifier, \

GradientBoostingClassifier, RandomForestClassifier, VotingClassifier

from sklearn.neighbors import KNeighborsClassifier # Nearest-Neighbors classifier

# Feature and Model Selection:

from sklearn.model_selection import StratifiedKFold # train/test splitting tool for cross-validation

from sklearn.model_selection import GridSearchCV # hyperparameter optimization tool via exhaustive search

from sklearn.model_selection import cross_val_score # automates cross-validated scoring

from sklearn.metrics import precision_score, recall_score, f1_score, roc_curve, auc # scoring metrics

from sklearn.feature_selection import RFE # recursive feature elimination

from sklearn.model_selection import learning_curve # learning-curve generation for bias-variance tradeoff

from sklearn.model_selection import validation_curve # for fine-tuning hyperparameters

from sklearn.pipeline import Pipeline

# Plotting:

import matplotlib

import matplotlib.pyplot as plt

import seaborn as sns

from statsmodels.graphics.mosaicplot import mosaic

# Manage Warnings:

import warnings

warnings.filterwarnings('ignore')

# Ensure Jupyter Notebook plots the figures in-line:

%matplotlib inline

1) Exploratory Data Analysis¶

1.1 - Getting Started¶

On April 15th 1912, the Titanic sank during her maiden voyage after colliding with an iceberg. Only 722 out of 2224 (32.5%) of its passengers and crew would survive. Such loss of life was in part due to the lack of sufficient numbers of lifeboats.

Though survival certainly involved an element of luck, some groups of people (e.g. women, children, the upper-class, etc.) may have been more likely to survive than others. Our goal is to use machine learning to predict which passengers survived the tragedy, based on factors such as gender, age, and social status.

For the Kaggle competition, we are to create a .csv submission file with two headers (PassengerId, Survived), and provide binary classification predictions for each passenger, where 1 = survived and 0 = deceased. The competition instructions and data are found here.

Data Dictionary¶

A few notes below about the meaning of the features in the raw dataset:

- Survival: 0 = False (Deceased), 1 = True (Survived).

- Pclass: Passenger ticket class; 1 = 1st (upper class), 2 = 2nd (middle class), 3 = 3rd (lower class).

- SibSp: Passenger's total number of siblings (including step-siblings) and spouses (legal) aboard the Titanic.

- Parch: Passenger's total number of parents or children (including stepchildren) aboard the Titanic.

- Embarked: Port of Embarkation, where C = Cherbourg, Q = Queenstown, S = Southampton.

- Age: Ages under 1 are given as fractions; if the age is estimated, it is in the form of xx.5.

a) Importing the Data¶

We will use Panda's dataframe structures for storing our data. As we explore our data and define new features, it will be useful to combine the training and test data into a single dataset.

df_train = pd.read_csv('./titanic-data/train.csv')

df_test = pd.read_csv('./titanic-data/test.csv')

dataset = pd.concat([df_train, df_test]) # combined dataset

test_Ids = df_test['PassengerId'] # identifiers for test set (besides survived=NaN)

df_train.head(5)

b) Data Completeness¶

Let us check upfront how complete our dataset is. Here we count the missing entries for each feature:

print('Training Set Dataframe Shape: ', df_train.shape)

print('Test Set Dataframe Shape: ', df_test.shape)

print('\nTotal number of entries in our dataset: ', dataset.shape[0])

print('\nNumber of missing entries in total dataset:')

print(dataset.isnull().sum())

Findings:

- Cabin data is missing for more than 75% of all passengers. This feature is too incomplete to include in our predictive models. However, we will still explore whether it offers any useful insights.

- We are missing about 20% of all age entries. We can attempt to impute or infer these missing entries based on average values or correlations with other features.

- We are missing two entries for Embarked, and one for Fare. We will impute these later.

c) Thinking Up Front / Doing Your Homework!¶

Gaining a bit more understanding of the problem context can provide several clues about the importance of variables, and help us make more sense of some of the relations that will be uncovered during our exploratory data analysis. So it's worth spending some time learning about what happened the night the Titanic sank (we can think of this as gaining some domain expertise). There are countless books, documentaries, and webpages dedicated to this subject. Here we highlight a few interesting facts:

- The Titanic's officers issued a "women and children first" order for evacuating passengers via lifeboats. However, there was no organized evacuation plan in place.

- There was in fact no general "abandon ship" order given by the captain, no public address system, and no lifeboat drill. Many passengers did not realize they were in any imminent danger, and some had to be goaded out of their cabins by members of the crew or staff.

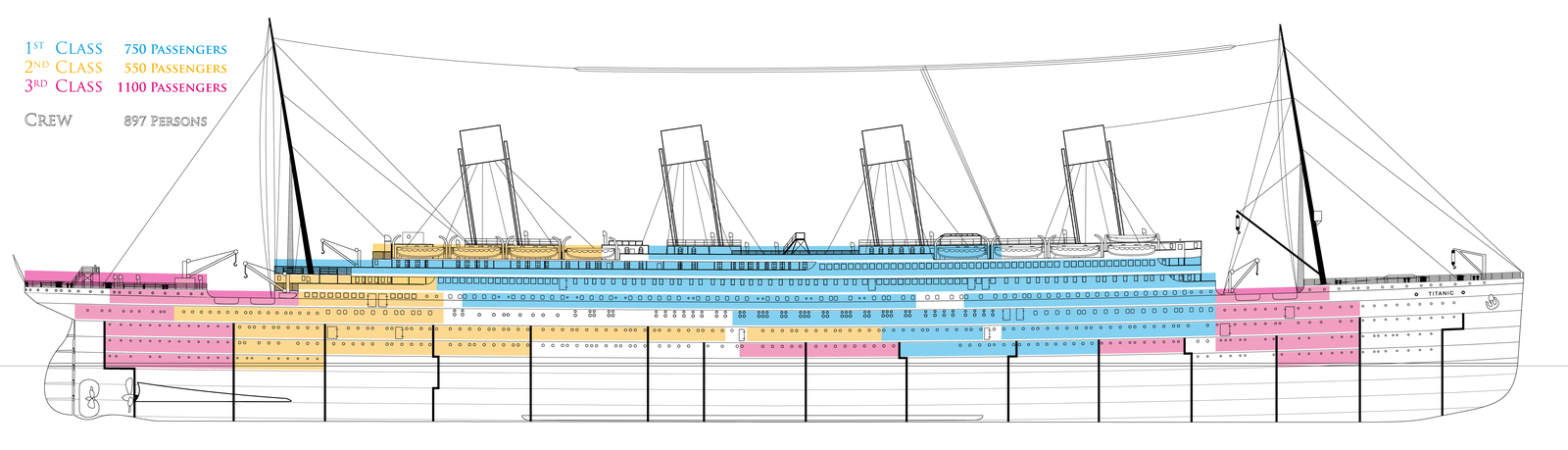

- Lifeboats were segregated into different class sections, and there were more 1st-class passenger lifeboats than for the other two classes.

- We know it was more difficult for 3rd class passengers to access lifeboats, because the 3rd-class passenger sections were gated off from the 1st and 2nd-class areas of the ship. This was actually due to US immigration laws, which required immigrants (primarily 3rd-class) to be segregated and processed separately from other passengers upon arrival to the US. As a consequence, 3rd-class passengers had to navigate through a maze of staircases and corridors to reach the lifeboat deck.

Given these facts, we can already surmize that Sex, Pclass, and Age are likely to be the most important features. We will see what trends our Exploratory Data Analysis reveals.

Conerning the Fare feature: Fare is given in Pounds Sterling. There were really no 'standard' fares - many factors influenced the price charged, including the cabin size and number of people sharing a cabin, whether it was on the perimeter of the ship (i.e. with a porthole) or further inside, the qualities of the furnishings and provisions, etc. Children travelled at reduced rates, as did servants with 1st-class tickets. There seemed also to have been some family discount rates given, but we lack detailed information on how this was calculated. However, our research does tell us that:

- Ticket price (Fare) was cumulative, and included the cost for all passengers sharing that ticket.

d) A Quick Glance at the Sorted CSV File¶

Several useful observations can be made by quickly glancing at the CSV file containing the combined training and test data, and sorting some of the entries. Don't underestimate the usefulness of this rather rudimentary step!

Findings:

- Sort by Passenger Name: Passengers with matching surnames tend to also have matching entries for several other features: Pclass, Ticket, Fare, and Embarked (in addition to Cabin when available). This tells us we can use matching Ticket and Fare information as a basis for grouping families. If we sort by ticket, we can use surnames to distinguish between 'family' groups and non-related co-travellers.

- Sort by Age: All entries with the title 'Master' in the name correspond to males under the age of 15. This can be useful in helping us impute missing age data.

- Sort by Cabin: We find that Cabin number is available for most passengers with PClass=1, but generally missing for passengers of Pclass=2 or 3.

- Sort by Ticket Names: (I.Tickets not containing letters.) Tickets with 4-digits correspond to Pclass 2 or 3; the vast majority of 5-digit tickets correspond to Pclass 1 or 2; for 6-digit and 7-digit tickets, the leading number matches the Pclass. (II. Tickets including letters.) Tickets beginning with A/4 correspond to passengers with embarked=S and PClass=3. Tickets beginning with C.A. or CA also correspond to embarked=S, with PClass of 2 or 3. All tickets beginning with PC correspond to PClass=1. These patterns might be useful for helping us spot inconsistencies in the data.

1.2 - Feature Engineering¶

As we explore our data, we will likely think of new features that may help us understand or predict Survival. The definition of new features from the initial feature set is a dynamic process that typically occurs in the midst of feature exploration. However, for organizational purposes, as we add new features we will return here to group their definitions upfront.

a) FamilySize, Surname, Title, and IsChild¶

- It makes sense to sum Parch and SibSp together to create a new feature FamilySize.

- When identifying families, it will also be useful to compare surnames directly, so we split Name into Surname and Title. Our quick scan of the CSV file showed that all male children 15 and under have the title 'Master', hence Title may be useful for helping us estimate missing Age values.

- We also create a new variable, IsChild, to denote passengers aged 15 and under.

# Create a new column as a sum of listed relatives

dataset['FamilySize'] = dataset['Parch'] + dataset['SibSp'] + 1 # plus one to include the passenger

# Clean and sub-divide the name data into two new columns using Python's str.split() function.

# A look at the CSV contents shows that we should first split the string at ', ' to isolate

# the surname, and then split again at '. ' to isolate the title.

dataset['Surname'] = dataset['Name'].str.split(', ', expand=True)[0]

dataset['Title'] = dataset['Name'].str.split(', ', expand=True)[1].str.split('. ', expand=True)[0]

# Create a new feature identifying children (15 or younger)

dataset['IsChild'] = np.where(dataset['Age'] < 16, 1, 0)

# We can save this for handling or viewing with external software

# dataset.to_csv('./titanic-data/combined_newvars_v1.csv')

# Now let's print part of the dataframe to check our new variable definitions...

dataset[['Name', 'Surname', 'Title', 'SibSp', 'Parch', 'FamilySize', 'Age', 'IsChild']].head(10)

b) Grouping Families and Travellers¶

Sorting the data by Ticket, one finds that multiple passengers share the same ticket number. This can be used as a basis for grouping passengers that travelled together. It will also be useful to distinguish whether these passenger groups are immediate-related (1st-degree) families, entirely unrelated or non-immediate (e.g. friends, cousins), or a mix. We will also identify passengers who are travelling alone. We define:

- GroupID: an integer label uniquely identifying each group; a surrogate to Ticket.

- GroupSize: total number of passengers sharing a ticket.

- GroupType: categorization of group into 'Family', 'Non-Family', 'Mixed', 'IsAlone'.

- GroupNumSurvived: number of members in that group which are known to have survived.

- GroupNumPerished: number of members in that group which are known to have perished.

# Create mappings for assigning GroupID, GroupType, GroupSize, GroupNumSurvived,

# and GroupNumPerished

group_id = 1

ticket_to_group_id = {}

ticket_to_group_type = {}

ticket_to_group_size = {}

ticket_to_group_num_survived = {}

ticket_to_group_num_perished = {}

for (ticket, group) in dataset.groupby('Ticket'):

# Categorize group type (Family, Non-Family, Mixed, )

num_names = len(set(group['Surname'].values)) # number of unique names in this group

group_size = len(group['Surname'].values) # total size of this group

if group_size > 1:

if num_names == 1:

ticket_to_group_type[ticket] = 'Family'

elif num_names == group_size:

ticket_to_group_type[ticket] = 'NonFamily'

else:

ticket_to_group_type[ticket] = 'Mixed'

else:

ticket_to_group_type[ticket] = 'IsAlone'

# assign group size and grouop identifier

ticket_to_group_size[ticket] = group_size

ticket_to_group_id[ticket] = group_id

ticket_to_group_num_survived[ticket] = group[group['Survived'] == 1]['Survived'].count()

ticket_to_group_num_perished[ticket] = group[group['Survived'] == 0]['Survived'].count()

group_id += 1

# Apply the mappings we've just defined to create the GroupID and GroupType variables

dataset['GroupID'] = dataset['Ticket'].map(ticket_to_group_id)

dataset['GroupSize'] = dataset['Ticket'].map(ticket_to_group_size)

dataset['GroupType'] = dataset['Ticket'].map(ticket_to_group_type)

dataset['GroupNumSurvived'] = dataset['Ticket'].map(ticket_to_group_num_survived)

dataset['GroupNumPerished'] = dataset['Ticket'].map(ticket_to_group_num_perished)

# Let's print the first 4 group entries to check that our grouping was successful

counter = 1

break_point = 4

feature_list = ['Surname', 'FamilySize','Ticket','GroupID','GroupType', 'GroupSize']

print('Printing Sample Data Entries to Verify Grouping:\n')

for (ticket, group) in dataset.groupby('Ticket'):

print('\n', group[feature_list])

if counter == break_point:

break

counter += 1

# Let's also check that GroupNumSurvived and GroupNumPerished were created accurately

feature_list = ['GroupID', 'GroupSize', 'Survived','GroupNumSurvived', 'GroupNumPerished']

dataset[feature_list].sort_values(by=['GroupID']).head(15)

Checking For Inconsistencies¶

# Check for cases where FamilySize = 1 but GroupType = Family

data_reduced = dataset[dataset['FamilySize'] == 1]

data_reduced = data_reduced[data_reduced['GroupType'] == 'Family']

# nri = 'NumRelatives inconsistency'

nri_passenger_ids = data_reduced['PassengerId'].values

nri_unique_surnames = set(data_reduced['Surname'].values)

# How many occurrences?

print('Number of nri Passengers: ', len(nri_passenger_ids))

print('Number of Unique nri Surnames: ',len(nri_unique_surnames))

# We will find that there are only 7 occurences, so let's go ahead and view them here:

data_reduced = data_reduced.sort_values('Name')

data_reduced[['Name', 'Ticket', 'Fare','Pclass', 'Parch',

'SibSp', 'GroupID', 'GroupSize','GroupType']].head(int(len(nri_passenger_ids)))

- With the exception of Mrs. John James Ware, we see that each of these passengers is paired with another having the same surname; we can presume that these are 2nd-degree relations (such as cousins), hence why each still has FamilySize=1 (which refers only to immediate family).

#Check for cases where FamilySize > 1 but GroupType = NonFamily

data_reduced = dataset[dataset['FamilySize'] > 1]

data_reduced = data_reduced[data_reduced['GroupType'] == 'NonFamily']

# ngwr = 'not grouped with relatives'

ngwr_passenger_ids = data_reduced['PassengerId'].values

ngwr_unique_surnames = set(data_reduced['Surname'].values)

# How many occurences?

print('Number of ngwr Passengers: ', len(ngwr_passenger_ids))

print('Number of Unique ngwr Surnames: ',len(ngwr_unique_surnames))

feature_list = ['PassengerId', 'Name', 'Ticket', 'Fare','Pclass', 'Parch',

'SibSp', 'GroupID', 'GroupSize','GroupType']

data_reduced[feature_list].sort_values('GroupID').head(int(len(ngwr_unique_surnames)))

If we look at matching group IDs, then in some cases these inconsistencies may be due to passenger substitutions. However, we need to better understand the significance of the names in parenthesis.

Consider GroupID=628: we have "Miss Elizabith Eustis" and "Mrs. Walter Sephenson (Martha Eustis)". A quick check of geneology databases online reports that there was indeed a miss Mrs. Walter Bertram Stephenson that boarded the Titanic; in this case, Martha Eustis is her maiden name, while Mrs. Walter B. Stephenson gives her title in terms of her husband's name (an old-fashioned practice). Another example is for GroupID=77, where we have "Brown, Mrs. John Murray (Caroline Lane Lamson)" and "Appleton, Mrs. Edward Dale (Charlotte Lamson)", another case of two related passengers whose names are given in terms of those of their husbands.

Since this hunt for inconsistencies turned up only 17 entries, we can manually correct the Group Type in cases (such as these two examples) where it is obvious the passengers are indeed family.

# manually correcting some mislabeled group types

# note: if group size is greater than the number of listed names above, we assign to Mixed

passenger_ids_toFamily = [167, 357, 572, 1248, 926, 1014, 260, 881, 592, 497]

passenger_ids_toMixed = [880, 1042, 276, 766]

dataset['GroupType'][dataset['PassengerId'].isin(passenger_ids_toFamily)] = 'Family'

dataset['GroupType'][dataset['PassengerId'].isin(passenger_ids_toMixed)] = 'Mixed'

## for verification:

# feature_list = ['PassengerId', 'Name', 'GroupID', 'GroupSize','GroupType']

# dataset[feature_list][dataset['PassengerId'].isin(

# passenger_ids_toFamily)].sort_values('GroupID').head(len(passenger_ids_toFamily))

LargeGroup Feature:

Lastly, we'll define a new feature, called LargeGroup, which equals 1 for GroupSize of 5 and up, and is 0 otherwise. For an explanation of what motivated this new feature, see our "Summary of Key Findings".

dataset['LargeGroup'] = np.where(dataset['GroupSize'] > 4, 1, 0)

c) Creating Bins for Age¶

During feature selection, we will assess whether this is advantageous over the continuous-variable representation.

# creation of Age bins; see Section 1.3-b

bin_thresholds = [0, 15, 30, 40, 59, 90]

bin_labels = ['0-15', '16-29', '30-40', '41-59', '60+']

dataset['AgeBin'] = pd.cut(dataset['Age'], bins=bin_thresholds, labels=bin_labels)

d) Logarithmic and 'Split' (Effective) Fare¶

Our research found that ticket price was cumulative based on the number of passengers sharing that ticket. We therefore define a new fare variable, 'SplitFare', that subdivides the ticket price based on the number of passengers sharing that ticket. We also create 'log10Fare' and 'log10SplitFare' to map these to a base-ten logarithmic scale.

# split the fare based on GroupSize; express as fare-per-passenger on a shared ticket

dataset['SplitFare'] = dataset.apply(lambda row: row['Fare']/row['GroupSize'], axis=1)

# Verify new feature definition

features_list = ['GroupSize', 'Fare', 'SplitFare']

dataset[features_list].head()

# Map to log10 scale

dataset['log10Fare'] = np.log10(dataset['Fare'].values + 1)

dataset['log10SplitFare'] = np.log10(dataset['SplitFare'].values + 1)

1.3 - Univariate Feature Exploration¶

a) Data Spread¶

We can use pandas' built-in methods to get a quick first impression of how our ordinal data are distributed:

dataset.describe()

Findings:

- Most of our passengers travelled without any relatives onboard. Less than 50% had FamilySize > 1.

- Less than 9% of our passengers were children.

- While most fares were under 15.00, it would appear there are passengers on board whose fare price (e.g. 512.00) are more than five standard deviations above this, implying a significant spread in passenger wealth. We will need to examine the Fare feature for outliers.

- Only 38.8% of the passengers in our training set survived. This gives us a baseline: if we simply predicted Survival=0 for all passengers, we could expect to achieve roughly 60% accuracy. Obviously we will aim to do much better than this using our machine learning models.

b) Univariate Plots¶

Let's begin by looking at how mean survival depends on individual feature values:

def barplots(dataframe, features, cols=2, width=10, height=10, hspace=0.5, wspace=0.25):

# define style and layout

sns.set(font_scale=1.5)

plt.style.use('seaborn-whitegrid')

fig = plt.figure(figsize=(width, height))

fig.subplots_adjust(left=None, bottom=None, right=None, top=None, wspace=wspace, hspace=hspace)

rows = math.ceil(float(dataframe.shape[1]) / cols)

# define subplots

for i, column in enumerate(dataframe[features].columns):

ax = fig.add_subplot(rows, cols, i + 1)

sns.barplot(column,'Survived', data=dataframe)

plt.xticks(rotation=0)

plt.xlabel(column, weight='bold')

feature_list = ['Sex','Pclass', 'Embarked', 'SibSp', 'Parch', 'FamilySize']

barplots(dataset, features=feature_list, cols=3, width=15, height=40, hspace=0.35, wspace=0.4)

We'll also consider the statistics associated with GroupType and GroupSize, which we defined in Section 1.2 (Feature Engineering) while grouping families and co-travellers with shared tickets:

feature_list = ['GroupType','GroupSize']

barplots(dataset, features=feature_list, cols=2, width=15, height=75, hspace=0.3, wspace=0.3)

Note that the black 'error bars' on our plots represent 95% confidence intervals. For practical purposes, when comparing survival versus feature values, these bars can be thought of as statistical uncertainties given our limited sample size and the spread in the data.

Findings:

- Sex and Pclass both show a strong statistically significant influence on survival.

- FamilySize of 2-4 is more advantageous than larger families or passengers without family. Survival drops sharply at FamilySize=5 and beyond.

- Embarked shows no clear trend; we will later investigate this feature in more detail.

- For GroupType, we can clearly see that lone passengers have a lower survival probability compared to other groups (note, this also mirrors what we see for FamilySize=1 and GroupSize=1). Between the other three categories of Family, NonFamily, and Mixed, the wide confidence bounds on the latter two make it difficult to assert whether any of these three have a statistically significant advantage relative to each other.

- For GroupSize, we see a trend similar to the one we observed for FamilySize, where survival increases up to GroupSize=4, and then drops off sharply for group sizes of 5 and above. However, compared to FamilySize, the confidence bounds for this variable are tighter, and the relation between survival and GroupSize up to 4 appears more linear, suggesting that GroupSize may be a better variable for model training than FamilySize.

Given the FamilySize feature, it is not clear whether SibSp and Parch are now gratuitous, or whether they can still offer some valuable insight. This will need further investigation.

Now let's examine Age and Fare:

def histograms(dataframe, features, force_bins=False, cols=2, width=10, height=10, hspace=0.2, wspace=0.25):

# define style and layout

sns.set(font_scale=1.5)

plt.style.use('seaborn-whitegrid')

fig = plt.figure(figsize=(width, height))

fig.subplots_adjust(left=None, bottom=None, right=None, top=None, wspace=wspace, hspace=hspace)

rows = math.ceil(float(dataframe.shape[1]) / cols)

# define subplots

for i, column in enumerate(dataframe[features].columns):

ax = fig.add_subplot(rows, cols, i + 1)

df_survived = dataframe[dataframe['Survived'] == 1]

df_perished = dataframe[dataframe['Survived'] == 0]

if force_bins is False:

sns.distplot(df_survived[column].dropna().values, kde=False, color='blue')

sns.distplot(df_perished[column].dropna().values, kde=False, color='red')

else:

sns.distplot(df_survived[column].dropna().values, bins=force_bins[i], kde=False, color='blue')

sns.distplot(df_perished[column].dropna().values, bins=force_bins[i], kde=False, color='red')

plt.xticks(rotation=25)

plt.xlabel(column, weight='bold')

feature_list = ['Age', 'Fare']

bins = [range(0, 81, 1), range(0, 300, 2)]

histograms(dataset, features=feature_list, force_bins=bins, cols=2, width=15, height=70, hspace=0.3, wspace=0.2)

Blue denotes survivors; red denotes those who perished.

Findings:

- Age: Survival improves for children. Age-dependent differences in survival are perhaps easier to see in a kernel density estimate (KDE) plot (see below). Elderly passengers, around age 60 and up, tended to perish.

- Fare: Unsurpisingly passengers with the lowest fares perished in greater numbers. Fare values are indeed widely spread; we see many passengers with fares exceeding 50.00 and 100.00. It may be sensible to convert fare into a logarithmic value to explore its relation to survival.

Below we generate KDE plots and convert Fare to a base-10 log scale. We will also examine the 'SplitFare' variable, which divides the total ticket Fare price among the number of passengers sharing that ticket:

def univariate_kdeplots(dataframe, plot_features, cols=2, width=10, height=10, hspace=0.2, wspace=0.25):

# define style and layout

sns.set(font_scale=1.5)

plt.style.use('seaborn-whitegrid')

fig = plt.figure(figsize=(width, height))

fig.subplots_adjust(left=None, bottom=None, right=None, top=None, wspace=wspace, hspace=hspace)

rows = math.ceil(float(dataframe.shape[1]) / cols)

# define subplots

for i, feature in enumerate(plot_features):

ax = fig.add_subplot(rows, cols, i + 1)

g = sns.kdeplot(dataframe[plot_features[i]][(dataframe['Survived'] == 0)].dropna(), shade=True, color="red")

g = sns.kdeplot(dataframe[plot_features[i]][(dataframe['Survived'] == 1)].dropna(), shade=True, color="blue")

g.set(xlim=(0 , dataframe[plot_features[i]].max()))

g.legend(['Perished', 'Survived'])

plt.xticks(rotation=25)

ax.set_xlabel(plot_features[i], weight='bold')

feature_list = ['Age', 'log10Fare', 'log10SplitFare']

univariate_kdeplots(dataset, feature_list, cols=1, width=15, height=100, hspace=0.4, wspace=0.25)

We can more clearly see:

- Children have a survival advantage, particularly those 13 and under.

- Elderly passengers ~60 and up are most likely to perish.

- Survival is poorest for Fares of 10.0 or less ($\lt$ 1.0 on this log scale).

- The Fare KDE plot shows 2 clear points of concavity near log(Fare)=0.9 and log(Fare)=1.4, and possibly a third near log(Fare)=1.8. For Splitfare, the variance on the peaks appear smaller, and there seem to be 3 main peaks, possibly corresponding to the means for Pclasses 3, 2 and 1. It should be investigated how Fare and SplitFare is distributed among different Pclasses.

- We note that there appear several passengers whose Fare is listed as 0.0, virtually all of whom perished.

Let's now consider discretized Age Bins and see if this makes any of the Age-related trends clearer. First we'll group age into bins of 5 years:

dataset['AgeBin_v1'] = pd.cut(dataset['Age'], bins=range(0, 90, 5))

sns.set(rc={'figure.figsize':(14,8.27)})

sns.set(font_scale=1.0)

plt.style.use('seaborn-whitegrid')

g = sns.barplot('AgeBin_v1','Survived', data=dataset)

table = pd.crosstab(dataset['AgeBin_v1'], dataset['Survived'])

print('\n', table)

Findings (complementary to KDE plot):

- Survival tends to be highest in the 0-15 age group.

- There is a slight increase in survival between 30-40 relative to adjacent bins.

- Survival generally decreases after age 60; however, we have fewer samples upon which to base our statistics.

- However, due to the large variances on all bars, these findings need to be taken with some caution.

Let's use these trends to custom-define a new set of bins with reduced granulation (see Section 1.2-a for the 'AgeBin' variable definition):

sns.set(font_scale=1.5)

plt.style.use('seaborn-whitegrid')

g = sns.barplot('AgeBin','Survived', data=dataset)

This makes it even clearer that being a child (0-15) is a statistically significant predictor of higher survival. Let's focus on our 'IsChild' variable, which equals 1 for Ages 15 and under, otherwise 0.

barplots(dataset, features=['IsChild'], cols=1, width=5, height=100, hspace=0.3, wspace=0.2)

Findings:

- Children do indeed have significantly higher survival than non-children, as we might expect from the "women and children first" evacuation order. However, the survival probability (60%) is still quite a bit lower than that of Sex='female' (~73%) and Pclass=1 (~62.5%). There are clearly other features that play an important role in the likelihood of a child surviving, which we'll explore in Section 1.4.

What about discretizing log10 Fare into bins? We do this below.

sns.set(rc={'figure.figsize':(14,8.27)})

sns.set(font_scale=1.5)

plt.style.use('seaborn-whitegrid')

dataset['FareBin'] = pd.cut(dataset['log10Fare'], bins=5)

g = sns.barplot('FareBin','Survived', data=dataset)

Findings:

- There is an approximately linear increase in survival probability with increasing FareBin. Could this in fact provide better predictive granularization than Pclass? We can test this idea later during Feature Selection.

Does this trend hold true if we look at the log10 SplitFare variable?

sns.set(font_scale=1.0)

plt.style.use('seaborn-whitegrid')

dataset['SplitFareBin'] = pd.cut(dataset['log10SplitFare'], bins=5)

g = sns.barplot('SplitFareBin','Survived', data=dataset)

Unfortunately the nice linear trend doesn't quite hold up. Bins 2-3 are nearly identical; similarly for bins 4-5. This looks more like a replication of the Pclass dependencies. What if we plot again with more bins?

sns.set(font_scale=1.0)

plt.style.use('seaborn-whitegrid')

dataset['SplitFareBin'] = pd.cut(dataset['log10SplitFare'], bins=10)

g = sns.barplot('SplitFareBin','Survived', data=dataset)

The linearity is somewhat better but not great, and we also have relatively low confidences for our highest two bins.

c) The Role of Cabin¶

Can information about passenger Cabin be useful for predicting survival? Initially, one might think that passengers in cabins located more deeply within the Titanic are more likely to perish. The cabins listed in our passenger data are prefixed with the letters A through G. Some background research tells us that this letter corresponds to the deck on which the cabin was located (with A being closer to the top, G being deeper down). There also appears to be a single cabin entry beginning with the letter 'T', but it's not clear what this means, so we will omit it.

Let's take a look at whether the deck the cabin was located on had any significant influence on survival:

dataset['CabinDeck'] = dataset.apply(lambda row:

str(row['Cabin'])[0] if str(row['Cabin'])[0] != 'n'

else row['Cabin'], axis=1)

sns.set(font_scale=1.5)

plt.style.use('seaborn-whitegrid')

g = sns.barplot('CabinDeck','Survived', data=dataset[dataset['CabinDeck'] != 'T'].sort_values('CabinDeck'))

Findings:

- CabinDeck appears to have no significant influence on survival.

A few other comments:

- The cabin data we have exists predominantly for members of Pclass=1; it is missing for nearly all other passengers.

- We know that gates in some of the stairwells leading to higher decks were left shut, due to the segregation of 3rd-class passengers from the rest. This of course mainly impacted survival in Pclass=3.

- The iceberg penetrated the Titanic below deck G. Hence, there wasn't any localized damage/flooding directly to any of the cabins we are considering.

- Lower-class passengers were not necessarily on lower decks than higher-class passengers. The following schematic of passenger class cabin distributions shows that most decks contained a mix of classes, albeit 3rd-class passengers tended to be far towards the rear or front of the ship:

Conclusion:

- Cabin is not a useful feature for survival prediction. Having a known cabin number basically just tells us that the passenger is Pclass=1, and is therefore redundant.

1.4 - Exploring Feature Relations¶

We now proceed with a multivariate analysis to explore how different features influence each other's impact on passenger survival.

a) Feature Correlations¶

Let's first look at correlations between survival and some of our existing numerical features:

feature_list = ['Survived', 'Pclass', 'Fare', 'SplitFare', 'Age', 'SibSp', 'Parch', 'FamilySize', 'GroupSize']

plt.figure(figsize=(18,14))

sns.set(font_scale=1.5)

g = sns.heatmap(dataset[np.isfinite(dataset['Survived'])][feature_list].corr(),

square=True, annot=True, cmap='coolwarm', fmt='.2f')

Findings:

- Pclass and SplitFare both show significant correlation with survival, unsurprisingly.

- There is some negative correlation between Age and FamilySize. This makes sense, as we might expect larger families to consist of more children, thereby lowering the mean age.

- There is some negative correlation between Age and Pclass. This suggests that upper-class passengers (PClass=1) are generally older than lower-class ones.

- Unsurprisingly Sibsp, Parch, and FamilySize are all strongly correlated with each other.

- GroupSize appears to correlate positively with Fare, but has near-zero correlation with SplitFare, further indicative that Fare is cumulative and represents the total paid for all passengers sharing a ticket.

In our univariate plots we saw that FamilySize had a clear impact on survival, yet here the correlations between Survival and FamilySize are near-zero. This may be explained by the fact that the positive trend between survival and FamilySize reverses into a negative trend after FamilySize=4. A similar reason may be behind the fact that Survival shows little correlation with Age, yet we know Age < 15 (i.e. child) is an advantage.

At this point it is also common to examine 'pair plots' of variables. However, given that most of our features span only a few discrete values, this turns out to be of limited informative value for us, and is hence omitted.

b) Typical Survivor Attributes¶

We can easily check which combinations of nominal features give the best and worst survival probabilities. However, this is limited by small sample size for some of our feature combination subsets. Nonetheless it can be useful for benchmarking purposes. We limit this quick exercise to only a few features (if we specify too many, our sample sizes for each combination become too small).

def get_mostProbable(data, feature_list, feature_values):

high_val, low_val = 0, 1

high_set, low_set = [], []

high_size, low_size = [], []

for combo in itertools.product(*feature_values):

subset = dataset[dataset[feature_list[0]] == combo[0]]

for i in range(len(feature_list))[1:]:

subset = subset[subset[feature_list[i]] == combo[i]]

mean_survived = subset['Survived'].mean()

if mean_survived > high_val:

high_set = combo

high_val = mean_survived

high_size = subset.shape[0]

if mean_survived < low_val:

low_set = combo

low_val = mean_survived

low_size = subset.shape[0]

print('\n*** Most likely to survive ***')

for i in range(len(feature_list)):

print('%s : %s' % (feature_list[i], high_set[i]))

print('... with survival probability %.2f' % high_val)

print('and total set size %s' % high_size)

print('\n*** Most likely to perish ***')

for i in range(len(feature_list)):

print('%s : %s' % (feature_list[i], low_set[i]))

print('... with survival probability %.2f' % low_val)

print('and total set size %s' % low_size)

An examination of the best and worst combinations of 'Sex' and 'Pclass':

feature_list = ['Pclass', 'Sex']

feature_values = [[1, 2, 3], ['male', 'female']] # ['Pclass', 'Sex']

get_mostProbable(dataset, feature_list, feature_values)

Findings:

- Virtually all 1st-class females survived (97%).

- 3rd-class males where the most likely to perish, with only 14% survival.

This isn't too surprising, although the associated survival probabilities are useful to note.

What if we also consider whether the passenger was a child?

feature_list = ['Pclass', 'Sex', 'IsChild']

feature_values = [[1, 2, 3], ['male', 'female'], [0, 1]] # ['Pclass', 'Sex', 'IsChild']

get_mostProbable(dataset, feature_list, feature_values)

Findings:

- Male rather than female first-class children are most likely to survive (but note that we have only 5 samples in this subset).

- Males in Pclass=2 seem to fare worse than Pclass=3 when we delineate between adults and children.

c) Sex, Pclass, and IsChild¶

Let's take a closer look at how survival differs between male and female across all three passenger classes:

plt.figure(figsize=(10,5))

plt.style.use('seaborn-whitegrid')

g = sns.barplot(x="Pclass", y="Survived", hue="Sex", data=dataset)

Findings:

- Males in Pclass 2 and 3 have almost the same survival probability. Survival roughly doubles when going to Pclass 1.

- Females in Pclass 2 are almost as likely to survive as those in Pclass 1; however, survival drops sharply (by ~40%) for Pclass 3.

Now let's see how this subdivides between children and adults:

subset = dataset[dataset['IsChild'] == 0]

table = pd.crosstab(subset[subset['Sex']=='male']['Survived'], subset[subset['Sex']=='male']['Pclass'])

print('\n*** i) Adult Male Survival ***')

print(table)

table = pd.crosstab(subset[subset['Sex']=='female']['Survived'], subset[subset['Sex']=='female']['Pclass'])

print('\n*** ii) Adult Female Survival ***')

print(table)

subset = dataset[dataset['IsChild'] == 1]

table = pd.crosstab(subset[subset['Sex']=='male']['Survived'], subset[subset['Sex']=='male']['Pclass'])

print('\n*** iii) Male Child Survival ***')

print(table)

table = pd.crosstab(subset[subset['Sex']=='female']['Survived'], subset[subset['Sex']=='female']['Pclass'])

print('\n*** iv) Female Child Survival ***')

print(table)

g = sns.factorplot(x="IsChild", y="Survived", hue="Sex", col="Pclass", data=dataset, ci=95.0)

# ci = confidence interval (set to 95%)

Findings:

Males:

- Virtually all of the male children in our training dataset survive in Pclasses 1 and 2.

- The IsChild feature clearly has a significant impact on survival likelihood (well above 50%) for Pclass 1 and 2; for Pclass 3, this difference is a more modest 20%.

Females:

- All but one of the Pclass 1 and 2 female children survived.

- Survival of a female child versus an adult is only modestly better (by perhaps 5%).

- The vast majority of female passengers who perished were in Pclass=3 (see table ii).

d) FamilySize, Sex, and Pclass¶

We saw earlier that FamilySize improves survival up to FamilySize=4, then sharply drops. Does Sex have any influence on this trend?

g = sns.factorplot(x="FamilySize", y="Survived", hue="Sex", data=dataset, ci=95.0)

# ci = confidence interval (set to 95%)

plt.style.use('seaborn-whitegrid')

Findings:

- Increase in Survival with FamilySize (up to 4) is greater for males than females.

- Both sexes follow the same general trend otherwise.

It would be interesting to know the role Pclass plays in FamilySize survival:

# Class distribution for a given FamilySize

table = pd.crosstab(dataset['FamilySize'], dataset['Pclass'])

print('\n', table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.plot(kind="bar", stacked=True)

plt.xticks(rotation=0)

plt.xlabel('FamilySize', weight='bold')

plt.ylabel('Fraction of Total')

plt.tight_layout()

leg = plt.legend(title='Pclass', loc=9, bbox_to_anchor=(1.05, 1.0))

# Comparison of surival

g = sns.factorplot(x="FamilySize", y="Survived", hue="Pclass", data=dataset, ci=95.0)

Findings:

- FamilySize > 5 were predominantly 3rd class.

- FamilySize = 4 was predominantly 1st and 2nd class.

- Pclass 1 breaks from the linear trend early, dipping below Pclass=2 for FamilySize=4.

Finally, let's look at how the various FamilySizes are split between the two sexes:

# Sex distribution for a given FamilySize

table = pd.crosstab(dataset['FamilySize'], dataset['Sex'])

print('\n', table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.plot(kind="bar", stacked=True)

plt.xticks(rotation=0)

plt.xlabel('FamilySize', weight='bold')

plt.ylabel('Fraction of Total')

plt.tight_layout()

leg = plt.legend(title='Sex', loc=9, bbox_to_anchor=(1.05, 1.0))

Findings:

- The proportion of males is greatest for FamilySize=1, and begins to drop for FamilySizes of 2, 3, and 4. Hence, the trend we observed earlier of FamilySizes 2, 3 and 4 becoming increasingly advantageous to survival might in part be driven by the Sex feature.

- For FamilySize > 4, we cannot associate the lower survival rates to a higher proportion of males, as the sexes are mixed and slightly in favour of females.

e) A Closer Look at PClass and Fare¶

We know that fare and Pclass are strongly correlated, and that Pclass on its own is a strong predictor of survival. Our main question is: can the distribution of Fares within each Pclass offer better granulization about survival?

First let's examine how the fares are distributed:

sns.set(font_scale=1.5)

plt.style.use('seaborn-whitegrid')

fig = plt.figure(figsize=(14, 5))

fig.subplots_adjust(left=None, bottom=None, right=None, top=None, wspace=0.3, hspace=0.5)

ax = fig.add_subplot(1, 2, 1)

ax = sns.boxplot(x="Pclass", y="Fare", hue="Survived", data=dataset)

ax.set_yscale('log')

ax = fig.add_subplot(1, 2, 2)

ax = sns.boxplot(x="Pclass", y="SplitFare", hue="Survived", data=dataset)

ax.set_yscale('log')

Findings:

- SplitFare provides less variance and better separation between Pclasses.

let's also examine Fare and SplitFare price versus GroupSize within each Pclass:

# Plots for Fare

sns.set(font_scale=1.5)

plt.style.use('seaborn-whitegrid')

fig = plt.figure(figsize=(14, 3))

fig.subplots_adjust(left=None, bottom=None, right=None, top=None, wspace=0.35, hspace=0.5)

for i, Pclass_ in enumerate([1, 2, 3]):

ax = fig.add_subplot(1, 3, i + 1)

ax = sns.pointplot(x='GroupSize', y='Fare', data=dataset[dataset['Pclass'] == Pclass_])

plt.title('Pclass = %s' % Pclass_)

# Plots for SplitFare

sns.set(font_scale=1.5)

plt.style.use('seaborn-whitegrid')

fig = plt.figure(figsize=(14, 3))

fig.subplots_adjust(left=None, bottom=None, right=None, top=None, wspace=0.35, hspace=0.5)

for i, Pclass_ in enumerate([1, 2, 3]):

ax = fig.add_subplot(1, 3, i + 1)

ax = sns.pointplot(x='GroupSize', y='SplitFare', data=dataset[dataset['Pclass'] == Pclass_])

plt.title('Pclass = %s' % Pclass_)

Findings:

- Fare scales positively with Groupsize, whereas in SplitFare this positive trend disappears. (As we might expect, since the impact of GroupSize on Fare has been normalized out for SplitFare.)

- In SplitFare for Pclass=3, there appears to be a decreasing trend up to GroupSize=4. It is possible this is due partially to fare discounts applied to families in that passenger class.

def KDE_feature_vs_Pclass(dataframe, feature,

width=10, height=10, hspace=0.2, wspace=0.25):

# define style and layout

cols=3

sns.set(font_scale=1.5)

plt.style.use('seaborn-whitegrid')

fig = plt.figure(figsize=(width, height))

fig.subplots_adjust(left=None, bottom=None, right=None, top=None, wspace=wspace, hspace=hspace)

rows = math.ceil(float(dataframe.shape[1]) / cols)

# define subplots

for i, pclass in enumerate(['Pclass1', 'Pclass2', 'Pclass3']):

ax = fig.add_subplot(rows, cols, i + 1)

df_subset = dataframe[dataframe['Pclass'] == i+1]

g = sns.kdeplot(df_subset[feature][df_subset['Survived'] == 0].dropna(), shade=True, color="red")

g = sns.kdeplot(df_subset[feature][(df_subset['Survived'] == 1)].dropna(), shade=True, color="blue")

g.set(xlim=(df_subset[feature].min() , df_subset[feature].max()))

g.legend(['Perished', 'Survived'])

plt.xticks(rotation=25)

plt.title(pclass)

ax.set_xlabel(feature, weight='bold')

KDE_feature_vs_Pclass(dataset, 'log10Fare', width=20, height=50, hspace=0.2, wspace=0.25)

KDE_feature_vs_Pclass(dataset, 'log10SplitFare', width=20, height=50, hspace=0.2, wspace=0.25)

Findings:

- Fare does appear to be a good predictor of survival within each Pclass, suggesting it provides better granulation than Pclass alone.

- For SplitFare, on the other hand, higher fares within each Pclass no longer appear to yield higher survival. In fact, for Pclass 2 and 3, we see the opposite. Why? Consider the higher survival at the lower values of SplitFare. This could be attributable to the fact that GroupSizes 2-4, which we found earlier to have higher survival rates, generally tended to have lower values of SplitFare, possibly due to family fare discounts.

These findings imply that any predictive value Fare has is derived predominantly from GroupSize. The amount of redundancy between Fare, Pclass, and GroupSize will be better elucidated through the dimensionality reduction techniques applied in Section 2.

f) Survival vs Embarked, and its Relation to Pclass and Sex¶

Recall from our univariate plots that passengers embarking at Cherbourg appeared to have a higher survival probability than those embarking at the other two ports. Let's investigate this in greater detail. First let's examine the Pclass distributions of passengers at each port.

table = pd.crosstab(dataset['Embarked'], dataset['Pclass'])

print(table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.reindex(['S', 'C', 'Q']).plot(kind="bar", stacked=True)

plt.xlabel('Embarked')

plt.ylabel('Fraction of Total')

plt.tight_layout()

leg = plt.legend(title='Pclass', loc=9, bbox_to_anchor=(1.1, 1.0))

Findings:

- Unsurprisingly, Cherbourg had the largest proprotion of Pclass=1 passengers.

- Interestingly, univariate survival probability for S and Q were roughly equal, yet we see here that S had a significantly larger fraction of passengers that were Pclass 1 and 2. Let's explore this in more detail by looking at Pclass survival rates for each embarkment port.

plt.figure(figsize=(10,5))

g = sns.barplot(x="Embarked", y="Survived", hue="Pclass", data=dataset)

We see that survial of passengers in PClass 3 was higher in both C and Q than it was in S. Could this be due to differences in the fraction of male/female passengers?

data_subset = dataset[dataset['Pclass']==3]

table = pd.crosstab(data_subset['Embarked'], data_subset['Sex'])

print('\n', table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.reindex(['S', 'C', 'Q']).plot(kind="bar", stacked=True)

plt.xlabel('Embarked')

plt.ylabel('Fraction of Total')

plt.title('Sex of Passengers in Pclass=3')

plt.tight_layout()

leg = plt.legend(title='Sex', loc=9, bbox_to_anchor=(1.2, 1.0))

So we see that more of the third class passengers embarking at S were male, but only by a small margin compared to C; that is probably not enough to explain the lower survival rate, so let's explore further:

g = sns.factorplot(x='Pclass', y='Survived', hue='Sex', col='Embarked', data=dataset, ci=95.0)

# ci = confidence interval (set to 95%)

What's interesting is that we see that third-class female passengers had a much lower survival probability if they embarked at S compared to both C and Q. This could partly explain why survival for Pclass 3 is lower for S than Q. But why would third class females be less likely to survive for Embarked = S ?

data_subset = dataset[dataset['Pclass']==3]

data_subset = data_subset[data_subset['Sex']=='female']

table = pd.crosstab(data_subset['Embarked'], data_subset['FamilySize'])

print('\n', table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.reindex(['S', 'C', 'Q']).plot(kind="bar", stacked=True, colormap='coolwarm')

plt.xlabel('Embarked')

plt.ylabel('Fraction of Total')

plt.title('FamilySize of Females in Pclass=3')

plt.tight_layout()

leg = plt.legend(title='FamilySize', loc=9, bbox_to_anchor=(1.2, 1.2))

It's possible that the lower third-class female survival rate for Embarked=S is related to the fact that a larger fraction of females in S have a large FamilySize (5 or higher), which we know has overall poorer survival rates.

What about passenger age? Let's look at how Age was distributed among Female passengers at each port of embarkment.

plt.figure(figsize=(10,5))

g = sns.violinplot(x="Embarked", y="Age", hue="Survived",

data=data_subset, split=True, palette='Set1', title='Female Passenger Age Distributions')

So another clue is that we see S had more female children, but surprisingly a larger proportion of these children died compared to C and Q. So let's look at survival of third-class female passengers under the age of 16 (e.g. children).

# survival of third-class female children

data_subset = data_subset[data_subset['Age'] < 16]

table = pd.crosstab(data_subset['Embarked'], data_subset['Survived'])

print('\n', table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.reindex(['S', 'C', 'Q']).plot(kind="bar", stacked=True,

colormap='coolwarm_r')

plt.xlabel('Embarked')

plt.ylabel('Fraction of Total')

plt.title('Survival of Female Children in Pclass=3')

plt.tight_layout()

leg = plt.legend(title='Survived', loc=9, bbox_to_anchor=(1.2, 1.0))

Q had only one female child and she survived. C had a small number, 11, of which 9 survived. In S, only one third survived. This is very surprising as this is well below the univariate female survival rate of 70%, and total pclass=3 female survival rate of 50%.

How does this compare against family size? We'll include male children in our plots, as a benchmark:

data_subset = dataset[dataset['Pclass']==3]

data_subset = data_subset[data_subset['Age'] < 16]

g = sns.factorplot(x="FamilySize", y="Survived", hue="Sex",

col="Embarked", data=dataset, ci=95.0)

print('Survival of Children Under 16, Pclass=3')

So the drop in survival for female children indeed seems to be tied to FamilySize. By now it appears that the FamilySize feature is responsible for the lower survival for PClass=3 in emabarked=S, as it is somehow tied to a larger fraction of the female passengers perishing.

g) A Closer Look at GroupType and GroupSize¶

Let's examine how Survival vs. GroupSize might vary with GroupType:

# focus only on groupsize < 5

plt.figure(figsize=(8,5))

g = sns.barplot(x="GroupSize", y="Survived", hue="GroupType", data=dataset[dataset['GroupSize'] < 5], palette='Set1')

plt.tight_layout()

leg = plt.legend(title='GroupType', loc=9, bbox_to_anchor=(1.15, 1.0))

We notice:

- For GroupSize=2, it is clearly advantageous to belong to a Family group rather than Non-Family.

It would also be interesting to explore the relationships GroupSize and GroupType have with other variables such as Sex, PClass, and Embarked. Some questions we ask:

- Are there differences in which GroupTypes and GroupSizes men and women tend to belong to?

- Do larger groups (the ones with poorer survival) tend to be in a higher or lower passenger class?

- What class do our lone travellers tend to belong to, and where do they typically embark?

# Sex distribution across GroupSize

table = pd.crosstab(dataset['GroupSize'], dataset['Sex'])

print('\n', table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.plot(kind="bar", stacked=True)

plt.xticks(rotation=0)

plt.xlabel('GroupSize', weight='bold')

plt.ylabel('Fraction of Total')

plt.tight_layout()

leg = plt.legend(title='Sex', loc=9, bbox_to_anchor=(1.15, 1.0))

# Sex distribution across GroupType

table = pd.crosstab(dataset['GroupType'], dataset['Sex'])

print('\n', table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.plot(kind="bar", stacked=True)

plt.xticks(rotation=0)

plt.xlabel('GroupType', weight='bold')

plt.ylabel('Fraction of Total')

plt.tight_layout()

leg = plt.legend(title='Sex', loc=9, bbox_to_anchor=(1.15, 1.0))

Findings:

- Our proportions of male vs. female passengers for each GroupSize mirrors what we have already found for FamilySize.

- As with FamilySize, we see that the male proportion of GroupSize tends to decrease between 1 and 4. This time we also see it clearly begin increasing again for GroupSizes 5 and higher. Since we know males in general have a much lower survival probability than females, this is seems to be at least partially responsible for the trends seen earlier for GroupSize. However, it is clearly not the whole story, as we see we still have a sizable female fraction for GroupSizes > 4 even though those groups experienced a sharp dropoff in surival probability. The lower survival at higher GroupSizes might be driven by those larger groups being predominantly of lower class, which we'll investigate soon.

- Unsurprisingly, the majority of lone passengers are male.

- Females have a slightly larger presence in Family groups compared to mixed and non-Family.

Let's look at female and male survival as a function of GroupType and GroupSize:

g = sns.factorplot(x="GroupSize", y="Survived", hue="GroupType", col="Sex",

data=dataset[dataset['GroupType'] != 'IsAlone'], ci=95.0)

Findings:

- Interestingly, survival is best for females in Mixed groups, closely followed by Non-Family and then Family groups. Survival plummets at a GroupSize of 5 (except for the mixed case, which plummets at 6).

- At low GroupSizes, males do significantly better in Family groups than they do Non-Family groups.

Now let's look at the distribution of passenger classes:

# Class distribution for a given GroupSize

table = pd.crosstab(dataset['GroupSize'], dataset['Pclass'])

print('\n', table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.plot(kind="bar", stacked=True)

plt.xticks(rotation=0)

plt.xlabel('GroupSize', weight='bold')

plt.ylabel('Fraction of Total')

plt.tight_layout()

leg = plt.legend(title='Pclass', loc=9, bbox_to_anchor=(1.15, 1.0))

We find:

- GroupSize=1 (GroupType = IsAlone) tends to predominantly be 3rd-class passengers (hence Pclass, in addition to the large fraction of males, may be responsible for the low survival probability we found for IsAlone).

- For GroupSize 2-4, a comparatively smaller fraction of passengers belong to 3rd class.

This raises the question: to what extent is Pclass responible for the variation in survival among different GroupSizes?

# Survival Probability for GroupSize < 5 Given Pclass

plt.figure(figsize=(8,5))

g = sns.barplot(x="GroupSize", y="Survived", hue="Pclass", data=dataset[dataset['GroupSize'] < 5], palette='Set1')

plt.tight_layout()

leg = plt.legend(title='Pclass', loc=9, bbox_to_anchor=(1.15, 1.0))

Findings:

- We see that for each Pclass we retain a roughly linear trend of increasing survival with increasing GroupSize up to 4; at the same time, within a given GroupSize, there is also consistently a linear trend between surival and Pclass.

So is GroupSize a useful feature in its own right, or are its trends derivative from other features? There's one more thing to check: earlier we found that a higher fraction of males at lower GroupSizes could also be influencing the GroupSize survival trends. So let's isolate for one sex, and see if these distinctions between GroupSizes remains:

data_subset_1 = dataset[dataset['Sex']=='male']

data_subset_2 = dataset[dataset['Sex']=='female']

# Survival Probability for GroupSize < 5 Given Pclass

plt.figure(figsize=(8,5))

g = sns.barplot(x="GroupSize", y="Survived", hue="Pclass",

data=data_subset_1[data_subset_1['GroupSize'] < 5], palette='Set1')

plt.tight_layout()

plt.title('Male Passengers Only:')

leg = plt.legend(title='Pclass', loc=9, bbox_to_anchor=(1.15, 1.0))

plt.figure(figsize=(8,5))

g = sns.barplot(x="GroupSize", y="Survived", hue="Pclass",

data=data_subset_2[data_subset_2['GroupSize'] < 5], palette='Set1')

plt.title('Female Passengers Only:')

plt.tight_layout()

leg = plt.legend(title='Pclass', loc=9, bbox_to_anchor=(1.15, 1.0))

Findings:

- When we isolate for Sex, and for Pclass, the general trend of increasing survival with increasing GroupSize for GroupSizes 1 through 4 disappears! There are some small increases between GroupSize 2-3 for male passengers of Pclasses 2 and 3, but this might not be significant given the large confidence intervals.

- This tells us that the trends in GroupSize (below GroupSize=5) are predominantly derivative of the Pclass and Sex features.

- We might consider replacing GroupSize and FamilySize with a new feature called "LargeGroup" that is True for GroupSize > 4 and otherwise False.

h) The Impact of Age on Survival, Given Pclass and GroupSize¶

From earlier, we know that being a Child (Age < 16) benefits survival. We also saw that passengers aged 30-40 had a slightly higher survival rate than those aged 16-29 or 41-59. Furthermore, we saw that passengers aged 60+ had an overall lower survival rate.

Our aim now is to determine whether these differences are explainable entirely in terms of other features such as Pclass, or whether Age can offer additional, complementary predictive power.

First, let's see how Age is distributed among Pclass:

table = pd.crosstab(dataset['Pclass'], dataset['AgeBin'])

print(table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.plot(kind="bar", stacked=True)

plt.xticks(rotation=0)

plt.xlabel('Pclass', weight='bold')

plt.ylabel('Fraction of Total')

leg = plt.legend(title='AgeBin', loc=9, bbox_to_anchor=(1.1, 1.0))

table = pd.crosstab(dataset['AgeBin'], dataset['Pclass'])

print('\n', table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.plot(kind="bar", stacked=True)

plt.xticks(rotation=0)

plt.xlabel('AgeBin', weight='bold')

plt.ylabel('Fraction of Total')

leg = plt.legend(title='Pclass', loc=9, bbox_to_anchor=(1.1, 1.0))

Findings:

- Higher-class passengers were generally older.

- Pclass 1 has the highest fraction of older (41-59) or elderly (60+) passengers.

- The fraction of passengers aged 30-40 was relatively uniform across all 3 Pclasses.

- Pclass 3 had the largest proportion of young adults (16-29) and children (0-15).

These findings are interesting because they tell us that Pclass was not the underlying reason for higher survival for the 30-40 age group, and that the elderly fared poorly in spite of being predominantly Pclass=1.

It is also interesting that earlier we found the 41-59 bin to have only slightly higher survival than the 16-29 age bin, despite the former having highest proportion in Pclass=1 and the latter having highest proportion in Pclass=3, as if higher age is counteracting some of the benefit of higher class.

Let's examine the survival of different age bins within each Pclass:

plt.figure(figsize=(12,5))

g = sns.barplot(x="Pclass", y="Survived", hue="AgeBin", data=dataset, palette='Set1')

plt.tight_layout()

leg = plt.legend(title='AgeBin', loc=9, bbox_to_anchor=(1.15, 1.0))

Findings:

- Across all three Pclasses, there appears little difference between the 16-29 and 30-40 age bins.

- Within Pclasses 1 and 3, survival of the 41-59 bin appears lower than for younger passengers.

These findings suggest that the higher overall (univariate) survival we found for the 30-40 age group may simply be an artefact of (a) how those passengers are distributed among Pclasses and (b) how survival is influenced by Pclass, rather than an age of 30-40 being an advantage in of itself. However, we do see a general negative effect of age for the 41-59 and 60+ age bins.

How is this impacted by Sex?

g = sns.factorplot(x="AgeBin", y="Survived", hue="Sex", col="Pclass", data=dataset, ci=95.0)

Findings:

- Within Pclass=1, higher age is significantly worse for male survival than female survival.

- For Pclasses 2 and 3, both sexes are impacted similarly.

We can also check these trends against GroupSize, albeit by now we know that trends relating to GroupSize are predominantly derivative of the Sex and Pclass features.

table = pd.crosstab(dataset['AgeBin'], dataset['GroupSize'])

print('\n', table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.plot(kind="bar", stacked=True, colormap='coolwarm')

plt.xticks(rotation=0)

plt.xlabel('AgeBin', weight='bold')

plt.ylabel('Fraction of Total')

leg = plt.legend(title='GroupSize', loc=9, bbox_to_anchor=(1.1, 1.0))

# let's look only at GroupSizes 4 and under

subset = dataset[dataset['GroupSize'] < 5]

plt.figure(figsize=(12,5))

g = sns.barplot(x="GroupSize", y="Survived", hue="AgeBin", data=subset, palette='Set1')

plt.tight_layout()

leg = plt.legend(title='AgeBin', loc=9, bbox_to_anchor=(1.15, 1.0))

# let's make our factorplot easier to read by only considering the middle three AgeBins

subset = subset[subset['AgeBin'] != '0-15']

subset = subset[subset['AgeBin'] != '60+']

g = sns.factorplot(x="GroupSize", y="Survived", hue="AgeBin", data=subset, col='Pclass', ci=95.0)

Findings:

- 'Unfavourable' GroupSizes are not the reason for the lower survival for AgeBins 41-59 and 60+, because we see those AgeBins in fact tend to have a greater proportion of passengers in 'favourable' GroupSizes (2-4), compared to the younger 16-29 and 30-40 bins.

- In general, AgeBin 41-59 had lower survival than younger bins irrespective of Pclass and GroupSize.

Conclusions:

- Higher passenger age, specifically 41+ years, has a negative impact on survival, which is most pronounced for first-class males, and is not derivative of the Pclass composition of these Age bins (in fact, it goes against the Pclass trend, since older passengers tend to be of a higher Pclass).

i) Do Groups Tend to Survive or Perish Together?¶

Next, we set out to answer a simple question: do groups tend to survive or perish together? That is to say, if you know that at least one member of a given group has perished, can this influence the likelihood of other members of that group surviving?

To investigate such relations, we will focus our attention only on groups of size 2-4 that lie entirely within our training set (i.e. for which we have complete knowledge about survival upon which to base our statistics).

How could this potentially be useful? If we imagine treating the class probabilities predicted by our trained machine-learning model as prior probabilities in the Bayesian sense, then when making our predictions on the test set, we could consider using any available knowledge of same-GroupID member survival from the training set to obtain posterior probabilities that further refine our predictions about a test-set passenger's survival. Or, alternatively, we could simply include 'GroupNumSurvived' or a derived feature as part of our training set.

Let's first obtain the distribution of our passengers across GroupNumSurvived for different Group Sizes:

data_subset = dataset[dataset['GroupSize'] == dataset['GroupNumSurvived'] + dataset['GroupNumPerished']]

data_subset = data_subset[data_subset['GroupSize'] > 1][data_subset['GroupSize'] < 5]

table = pd.crosstab(data_subset['GroupSize'], data_subset['GroupNumSurvived'])

print(table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.plot(kind="bar", stacked=True)

plt.xticks(rotation=0)

plt.xlabel('GroupSize', weight='bold')

plt.ylabel('Fraction of Total')

plt.tight_layout()

leg = plt.legend(title='NumGroup \nSurvived', loc=9, bbox_to_anchor=(1.15, 1.0))

We see from the printed table that there are few samples for GroupSize=4 upon which to base our statistics, so we focus here only on GroupSizes 2 and 3.

If we know the survival outcome of one passenger from a given GroupID from our training set, can that provide us with any information about the survival probability for a passenger in the test set that belongs to the same GroupID? Unfortnately, for GroupSize=2, since the probability is distributed roughly evenly between 0, 1, and 2 passengers surviving, knowledge that at least one passenger has survived is not particularly helpful in determining whether the second passenger survived.

On the other hand, for GroupSize=3, the uneven distributions tell us that there is indeed some information to be gained. In this case, looking at the barplot, if at least one passenger from a given GroupID is known to have survived, then we eliminate the bottom blue segment, renormalize the green+red+purple segments to sum to unity, and compare the fraction of red+purple to the fraction of green. We compute prior and posterior probabilities explicitly below:

print('*** GROUPSIZE=2 ***')

print('\nPrior Probability of a Passenger\'s Survival:')

print('%.4f' % data_subset[data_subset['GroupSize'] == 2]['Survived'].mean())

print('\nPosterior Probability given that at least one passenger from that GroupID survived:')

print('%.4f' % (table.values[0][2]/(table.values[0][1:3].sum())))

print('\n*** GROUPSIZE=3 ***')

print('\nPrior Probability of a Passenger\'s Survival:')

print('%.4f' % data_subset[data_subset['GroupSize'] == 3]['Survived'].mean())

print('\nPosterior Probability given that at least one passenger from that GroupID survived:')

print('%.4f' % ((table.values[1][2] + table.values[1][3])/(table.values[1][1:5].sum())))

print('\nPosterior Probability given that at least two passengers from that GroupID survived:')

print('%.4f' % ((table.values[1][3])/(table.values[1][2:5].sum())))

The above affirms that for GroupSize=2, even if we know one passenger from a group has survived, this does little to change the likelihood that the other has.

For GroupSize=3, it appears that if we know one passenger has survived, the likelihood of second surviving is very high, about 26% higher than if we know nothing. However, if we already know that two members of that group have survived, the likelihood that the third survived drops dramatically.

For completeness, we check whether these general trends hold true when we consider only familes, as opposed to mixed groups:

data_subset = dataset[dataset['GroupSize'] == dataset['GroupNumSurvived'] + dataset['GroupNumPerished']]

data_subset = data_subset[data_subset['GroupSize'] > 1][data_subset['GroupSize'] < 5]

data_subset = data_subset[data_subset['GroupType'] == 'Family']

table = pd.crosstab(data_subset['GroupSize'], data_subset['GroupNumSurvived'])

print('*** FAMILY-ONLY GROUPS ***')

print(table)

table_fractions = table.div(table.sum(1).astype(float), axis=0)

g = table_fractions.plot(kind="bar", stacked=True)

plt.xticks(rotation=0)

plt.xlabel('GroupSize', weight='bold')

plt.ylabel('Fraction of Total')

plt.tight_layout()

leg = plt.legend(title='NumGroup \nSurvived', loc=9, bbox_to_anchor=(1.15, 1.0))

We observe that overall, there is a smaller fraction of Family-only groups that perish in their entirety. For GroupSize=2, there is again little difference in the fraction of groups where both members survive versus where only one survives.

1.5 - Summary of Key Findings¶

The most important features appear to be Sex, Pclass, Age, and GroupSize (for GroupSize > 4), in that order. Some of our other interesting findings, that can help guide us in feature selection and classifier creation, are as follows:

- Females: Female Pclass 1 passengers nearly all survived (95%+). Most Pclass 2 female passengers also survived (90%+). This drops sharply for Pclass 3 female passengers (to about 50%), but being a female child increases survival by roughly 5%.

- Males: Most male children in Pclass 1 and 2 survive. For Pclass 3, male children are only about 20% more likely to survive than male adults. For male adults, survival is around 40% for Pclass 1, and around 15% for Pclass 2 and 3.

- Age: Survival increases for children under 16. Going by our AgeBin feature, survival decreases for passengers of age 41+, which is most pronounced for first-class males, and is not derivative of other features.

- FamilySize and GroupSize: When we isolate for Sex and Pclass, we find that the trend of increasing survival with increasing FamilySize or GroupSize (up to a value of 4) disappears! This tells us these trends were derivative of the Pclass and Sex features. However, the sharp drop in survival for FamilySize and GroupSize of 5 or larger is not entirely explainable from just Sex and Pclass. This motivates the creation of a new feature, "LargeGroup", denoting GroupSize > 5.

- GroupType: Passengers in GroupType "Alone" fare the worst, but these also tend to be predominantly male passengers of Pclass 3, who fare poorly anyway. For GroupSize=2, we found that passengers belonging to a Family GroupType had a greater chance of survival than thoses belonging to a non-family GroupType, but as already stated, the behaviour of GroupSize below 5 appears to be mostly determined by Sex and Pclass, so this is not very interesting. If we consider only Sex and not Pclass, survival for females appears best in the 'Mixed' GroupType, closely followed by Non-Family and then Family groups. At low GroupSizes, males do significantly better in Family groups than non-family groups.

- SplitFare: We found that any predictive power of SplitFare within each Pclass appeared to be explainable by the associated GroupSizes (with GroupSizes 2, 3, and 4 having better survival) which in turn is explainable via the composition of these GroupSizes in terms of Pclass and Sex. So it would appear that SplitFare doesn't offer any advantage over Pclass.

- Embarked: We found that third-class female passengers appeared to have a much lower survival probability if they embarked at S compared to both C and Q. But it appears this is simply due to the fact that a greater proportion of these females belonged to large GroupSizes (5 and up) which we know has a negative impact on survival. Embarked itself does not appear to offer any additional predictive power, and is therefore not a very interesting feature.

2) Data Pre-Processing¶

2.1 - Checking Data Consistency¶

When training predictive models, our underlying assumption is that the data we feed our model is correct and accurate. However, this is indeed an assumption, and is not necessarily always true. Here we check our data for inconsistencies and flag entries that are suspicious.

Earlier, when defining Groups for passengers sharing the same ticket, we checked for cases where FamilySize=1 but the GroupType was labelled 'Family' due to shared surnames. We found 7 such instances, and determined that these could be passengers with second-degree relations (such as cousins), which are not counted in the ParCh or SibSp metrics.

We also checked for cases where FamilySize > 1 but GroupType = 'NonFamily'. In most cases, we found many of such cases to be an artefact of females who were travelling under a maiden name rather than their spouse's surname, and we corrected their GroupType to 'Family' accordingly.

Some additional things we can check for are:

- Agreement between Sex and Title (obviously Miss or Mrs should be female, Mr should be male)

- Agreement of Fare and Embarked port among passengers sharing a ticket

- Age/Parch consistency (see below)

a) Title/Sex Inconsistencies¶

# Checking for Title/Sex inconsistencies

flagged_ids = []

for index, row in dataset.iterrows():

if row['Title'] in ['Mr', 'Mister', 'Master']:

if row['Sex'] != 'male':

flagged_ids.append(index)

elif row['Title'] in ['Miss', 'Mrs', 'Mme', 'Mlle']:

if row['Sex'] != 'female':

flagged_ids.append(index)

print('Number of Sex/Title Inconsistencies:', len(flagged_ids))

b) Embarked/Fare Inconsistencies within Groups¶

# Checking for group inconsistencies in Embarked or Fare

flagged_group_ids = []

for (group_id, group) in dataset.groupby('GroupID'):

if len(set(group['Embarked'].values)) != 1 or len(set(group['Fare'].values)) != 1:

flagged_group_ids.append(group_id)

print('Number of Embarked/Fare Inconsistencies within shared ticket groups:',

len(flagged_group_ids))

# Exploring the flagged Embarked/Fare group inconsistencies

feature_list = ['GroupID', 'GroupSize', 'Name', 'PassengerId', 'Ticket', 'Fare', 'Embarked']

dataset[dataset['GroupID'].isin(flagged_group_ids)][feature_list].sort_values('GroupID').head(10)

Findings: